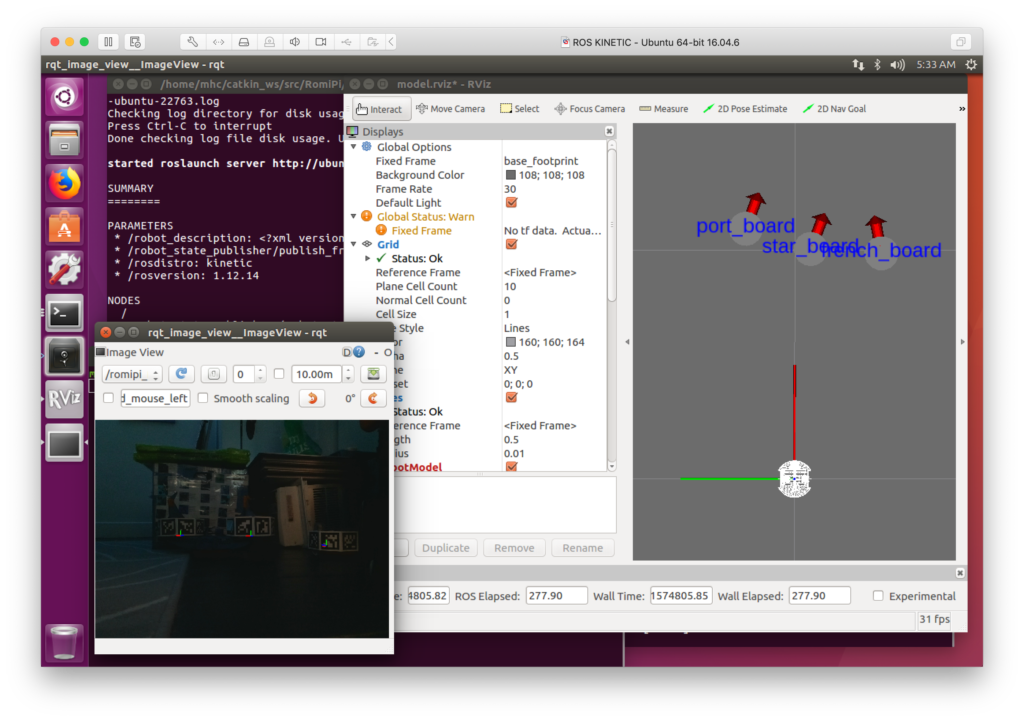

The RomiPi system uses OpenCV's standard ArUco fiducial markers for indoor localization. The image above shows the markers representing the positions of the robots Star, Port, and French relative to the RomiPi Jiffy. It also shows the stream from Jiffy's robot-mounted camera, from which the fiducial markers were extracted. All of this is possible because I finally got around to writing an RViz marker generation service.
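For context, OpenCV's ArUco pose estimation returns a translation vector `tvec` for each detected marker, giving the marker centre in the camera's optical frame (x right, y down, z forward). Placing a neighbour "relative to Jiffy" means re-expressing that point in Jiffy's base frame. The sketch below does this in plain Python, assuming ROS REP 103 base-frame axes and a level, forward-facing camera; `CAMERA_OFFSET` and `optical_to_base` are hypothetical names and values, not RomiPi's actual calibration or service code.

```python
"""Convert an ArUco marker position from the camera optical frame to the robot base frame.

Assumptions (hypothetical, not RomiPi's real calibration):
- tvec is the marker centre in the camera optical frame, as returned by
  OpenCV ArUco pose estimation: x right, y down, z forward (metres).
- The robot base frame follows ROS REP 103: x forward, y left, z up.
- The camera looks straight ahead with no tilt, mounted CAMERA_OFFSET
  metres from the base origin.
"""

from typing import Sequence, Tuple

# Hypothetical camera mounting offset in the base frame (x, y, z), metres.
CAMERA_OFFSET: Tuple[float, float, float] = (0.05, 0.0, 0.10)


def optical_to_base(tvec: Sequence[float]) -> Tuple[float, float, float]:
    """Re-express a point given in the optical frame in the base frame."""
    x_opt, y_opt, z_opt = tvec
    # Axis swap: optical z (forward) -> base x, optical x (right) -> -base y,
    # optical y (down) -> -base z; then add the camera's mounting offset.
    return (
        z_opt + CAMERA_OFFSET[0],
        -x_opt + CAMERA_OFFSET[1],
        -y_opt + CAMERA_OFFSET[2],
    )
```

For example, a marker detected 1 m in front of the camera and 0.2 m to its right (`tvec = (0.2, 0.0, 1.0)`) comes out at roughly `(1.05, -0.2, 0.10)` in the base frame: 1.05 m ahead of Jiffy's origin and 0.2 m to its right. A tilted camera would need a full rotation matrix in place of the axis swap.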

Sounds great! Why the awkward title?

The visualization looks great in a still image, but now look at a video of the same scenario.

In the video, Port, Star, and French appear to bounce all over the place in the visualizer, even though they are actually stationary relative to the camera robot, Jiffy. Because Jiffy calculates its navigation targets relative to these jittery fiducials, its navigation targets jump around too!
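One way to put a number on "bouncing all over the place" is to log each marker's detected position over a window of frames while nothing is moving, then measure the spread. This is a minimal sketch assuming detections arrive as hypothetical `(marker_id, x, y)` tuples already expressed in Jiffy's frame; `jitter_per_marker` is an illustrative helper, not part of RomiPi.

```python
from collections import defaultdict
from math import hypot
from statistics import mean
from typing import Dict, Iterable, List, Tuple

# Hypothetical detection format: (marker_id, x, y) in metres, in Jiffy's frame.
Detection = Tuple[int, float, float]


def jitter_per_marker(detections: Iterable[Detection]) -> Dict[int, float]:
    """Return the RMS distance of each marker's detections from their mean position.

    For stationary robots this should be close to zero; larger values mean
    the pose estimates are jittering from frame to frame.
    """
    by_id: Dict[int, List[Tuple[float, float]]] = defaultdict(list)
    for marker_id, x, y in detections:
        by_id[marker_id].append((x, y))

    result: Dict[int, float] = {}
    for marker_id, points in by_id.items():
        # Mean position of this marker over the logged window.
        cx = mean(p[0] for p in points)
        cy = mean(p[1] for p in points)
        # Root-mean-square distance of each detection from that mean.
        squared = [hypot(x - cx, y - cy) ** 2 for x, y in points]
        result[marker_id] = mean(squared) ** 0.5
    return result
```

A marker whose detections alternate between 1.0 m and 1.2 m ahead would report about 0.1 m of jitter, which is easy to compare before and after changes such as more light or bigger fiducials.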

Next Steps

I don't have a solution for this yet, but my guess is that it's time to start feeding the detections into a world-model builder. I will probably start with the SLAM tools provided with TurtleBot3 and move on from there if necessary.

- More light

- Bigger fiducials

- Improved fiducial board placement

- SLAM integration
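To make the world-model idea a little more concrete, here is a deliberately minimal sketch. It is not SLAM and not TurtleBot3's tooling: it keeps one position estimate per marker ID and blends each new detection in with an exponential moving average, so a stationary robot's estimate settles instead of following every noisy frame. It assumes Jiffy itself is not moving (otherwise detections would first need transforming into a fixed world frame, which is the job SLAM does); `LandmarkMap` and `alpha` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class LandmarkMap:
    """Smoothed (x, y) position estimate for each fiducial marker ID.

    alpha is the weight given to each new detection: smaller values smooth
    more but react more slowly when a robot really does move.
    """

    alpha: float = 0.2
    estimates: Dict[int, Tuple[float, float]] = field(default_factory=dict)

    def update(self, marker_id: int, x: float, y: float) -> Tuple[float, float]:
        """Blend a new detection into the stored estimate and return the result."""
        if marker_id not in self.estimates:
            # First sighting: take the detection as-is.
            self.estimates[marker_id] = (x, y)
        else:
            old_x, old_y = self.estimates[marker_id]
            # Move the estimate a fraction alpha of the way toward the detection.
            self.estimates[marker_id] = (
                old_x + self.alpha * (x - old_x),
                old_y + self.alpha * (y - old_y),
            )
        return self.estimates[marker_id]
```

With `alpha=0.2`, detections that alternate between 1.0 m and 1.2 m settle into an estimate that swings by only about 0.02 m around 1.1 m. The trade-off is lag: if a robot really moves, the estimate takes several frames to catch up.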