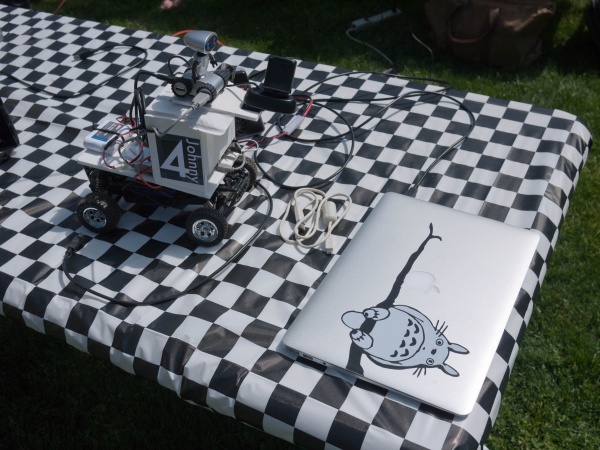

This year I entered the 2012 CMU MOBOT mobile robot competition. I had a nicely finished physical robot and a capable vision system, but I didn’t finish in time to get it all working well. Here I will document my entry for this year and discuss my plans for tuning the vision system for next year.

Me holding Johnny with his makeshift light shield before the second round of the competition. More details follow below.

Robot platform

I built my robot on a 1:12 scale RC car chassis. The car was a buggy design and could not support the heavy weight of the vision system and batteries. To correct this, I added small spacers to the coil-over suspension to fully compress the springs. I lost the suspension, but the robot stopped tipping over!

The RC car frame made a great platform for attaching a body with space for mounting the vision system and the extra batteries. I made the body out of foam-core board glued together and bolted to the original RC car frame. Zip-ties further stabilized the connection between the frame and the body.

Weight was also a problem for propulsion. At low speed the motor’s torque was not high enough to get the robot moving, and with open-loop control I could not increase the torque without also getting excessive speed. Open-loop speed control had another drawback: on the steep hills the robot raced ahead too quickly, and I was worried about it toppling in the turns.
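The usual remedy for both problems is closed-loop speed control: measure the wheel speed and adjust the motor command so the robot holds a target speed regardless of load. Johnny did not do this, and it would require a speed sensor such as a wheel encoder, which this build did not have. Below is a minimal sketch of a PI (proportional-integral) controller run against a toy drive model; the class name, gains, and model constants (`A`, `B`, the load values) are hypothetical illustration values, not measurements of this robot.

```python
TARGET_SPEED = 1.0   # desired speed (arbitrary units)
OPEN_LOOP_CMD = 0.5  # fixed command that yields TARGET_SPEED on flat ground
A, B = 2.0, 1.0      # hypothetical toy motor model: dv/dt = A*u - B*v - load


class PISpeedController:
    """Proportional-integral speed loop with output clamping and simple anti-windup."""

    def __init__(self, kp, ki, out_min=0.0, out_max=1.0):
        self.kp, self.ki = kp, ki
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0

    def update(self, target, measured, dt):
        error = target - measured
        candidate = self.integral + error * dt
        u = self.kp * error + self.ki * candidate
        if self.out_min <= u <= self.out_max:
            # Only accumulate the integral while the output is not saturated.
            self.integral = candidate
            return u
        return min(max(u, self.out_min), self.out_max)


def simulate(load, closed_loop, seconds=20.0, dt=0.01):
    """Return the final speed; load > 0 models an uphill, load < 0 a downhill."""
    ctrl = PISpeedController(kp=1.0, ki=2.0)
    v = 0.0
    for _ in range(round(seconds / dt)):
        u = ctrl.update(TARGET_SPEED, v, dt) if closed_loop else OPEN_LOOP_CMD
        v += dt * (A * u - B * v - load)  # forward-Euler step of the toy model
    return v


for load in (-0.5, 0.0, 0.5):
    print(f"load={load:+.1f}  open-loop={simulate(load, False):.2f}  "
          f"closed-loop={simulate(load, True):.2f}")
```

In this toy model the fixed open-loop command drifts with the hill (about 1.5 on the downhill, 0.5 on the climb), while the PI loop settles back at the target in both cases. At startup the large speed error also drives the command to its maximum, which is the extra torque an open-loop setting could not provide without overshooting.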

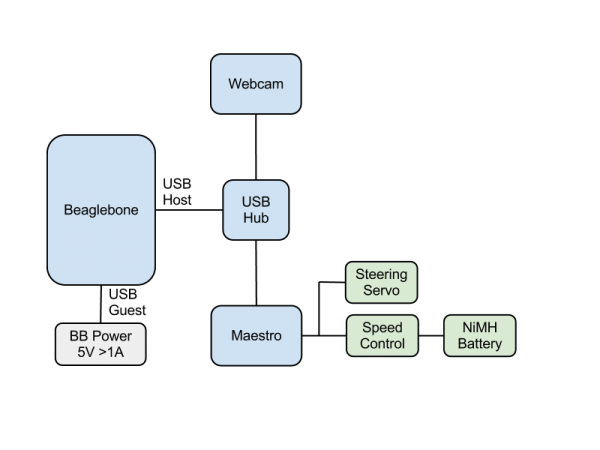

Above is the block diagram for Johnny 4. I used the BeagleBone because the Raspberry Pi was not yet publicly released. I may switch next year, as I would prefer to use an Ubuntu distribution and the version I had this year was not great: it had driver issues with the two different Logitech cameras I purchased. Hopefully the community will have improved these by next year’s competition. Wi-Fi drivers were also an issue. The drivers for my USB Wi-Fi adapter were not included in the distribution by default, so I had to compile and install them by hand, and even then they worked with limited function. I also had to update the kernel manually, which always sucks.

I used OpenCV through its Python wrapper for the vision-based control. I don’t remember right now which driver I used, but I will look that up next year and update this post. It worked, but it delayed frames and occasionally just stopped working. To solve the frame delays, I had to request 5 frames for every frame I processed to flush out the frame queue. Debugging on the BeagleBone with no video output was tedious, as I had to generate a video and copy it to the development machine for viewing. A Raspberry Pi, with its integrated graphics hardware, might help greatly.
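The frame-flushing workaround can be sketched as follows, assuming the camera is opened with `cv2.VideoCapture`. Its `grab()` method pulls a frame from the driver without decoding it and `read()` grabs and decodes one, so discarding four grabs before each `read()` returns roughly the newest frame. The helper name `read_latest` and the use of `grab()` instead of five full reads are assumptions, not necessarily what Johnny’s code did.

```python
def read_latest(cap, frames_per_read=5):
    """Return (ok, frame) for the newest frame, discarding stale buffered ones.

    `cap` is anything with OpenCV VideoCapture's grab()/read() methods.
    frames_per_read=5 mirrors the "5 frames per processed frame" workaround.
    """
    # Drop the older frames still sitting in the driver's queue (no decoding).
    for _ in range(frames_per_read - 1):
        if not cap.grab():
            return False, None
    # Grab and decode the frame we actually process.
    return cap.read()


# Usage (requires OpenCV and a camera):
#   import cv2
#   cap = cv2.VideoCapture(0)
#   ok, frame = read_latest(cap)
```

Some OpenCV capture backends also accept `cap.set(cv2.CAP_PROP_BUFFERSIZE, 1)` to shrink the queue directly, but support varies by driver, so the grab-and-discard loop is the more portable fallback.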

Ultimately the project failed because I had not accounted for the extreme shadows at the high-noon competition time. The robot’s view split into shadowed and unshadowed regions; it misinterpreted the unshadowed region as the track’s “line” and veered sharply off the course. I tried to fashion a makeshift light shield, but it was ineffective. Next year I will collect data in practice and account for shadowing using vision techniques.
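One common vision technique for this kind of shadowing, offered as an illustration rather than what Johnny used, is to compare each pixel with its local neighborhood instead of applying a single global threshold: a white line is brighter than the ground around it whether it sits in shadow or in sun. The sketch below builds a hypothetical synthetic frame (shadowed left half, sunlit right half, white line inside the shadow) and shows a fixed global threshold locking onto the sunlit half while a local-mean test finds the line. The window size `k` and `ratio` are illustrative values that would need tuning on real course images.

```python
import numpy as np


def box_mean(img, k):
    """Mean of each k x k neighborhood (k odd), computed with an integral image."""
    pad = k // 2
    padded = np.pad(img.astype(np.float64), pad, mode="edge")
    # Integral image with a leading row and column of zeros.
    ii = np.pad(padded.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    h, w = img.shape
    return (ii[k:k + h, k:k + w] - ii[:h, k:k + w]
            - ii[k:k + h, :w] + ii[:h, :w]) / (k * k)


def global_mask(gray, thresh=128):
    """Naive segmentation: every pixel brighter than a fixed level."""
    return gray > thresh


def local_mask(gray, k=15, ratio=2.0):
    """Pixels at least `ratio` times brighter than their local mean."""
    return gray > ratio * box_mean(gray, k)


def line_centroid_x(mask):
    """Column of the detected region's centroid, or None if nothing was found."""
    xs = np.nonzero(mask)[1]
    return float(xs.mean()) if xs.size else None


def synthetic_frame():
    """Hypothetical 40x80 grayscale frame imitating a noon shadow."""
    img = np.full((40, 80), 30, dtype=np.uint8)  # shadowed ground (left half)
    img[:, 40:] = 150                            # sunlit ground (right half)
    img[:, 16:20] = 120                          # white line inside the shadow
    return img


frame = synthetic_frame()
print(line_centroid_x(global_mask(frame)))  # 59.5: centroid of the sunlit half
print(line_centroid_x(local_mask(frame)))   # 17.5: center of the line
```

Local normalization is not a complete fix: pixels right at a sharp shadow edge can still pass the test when the window straddles the boundary, which is why practice data from the real course is needed to tune these parameters.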

Next steps include collecting vision training data, adding a map of the course to my control logic, and possibly upgrading the chassis to move all this weight.

This project is in progress. Hopefully I’ll complete more in time for the 2013 competition!